8th January 2026

Estimated reading time: 10 minutes

Data Annotation in AI: What It Is, Why It Matters, and Real Examples Explained

What Is Data Annotation?

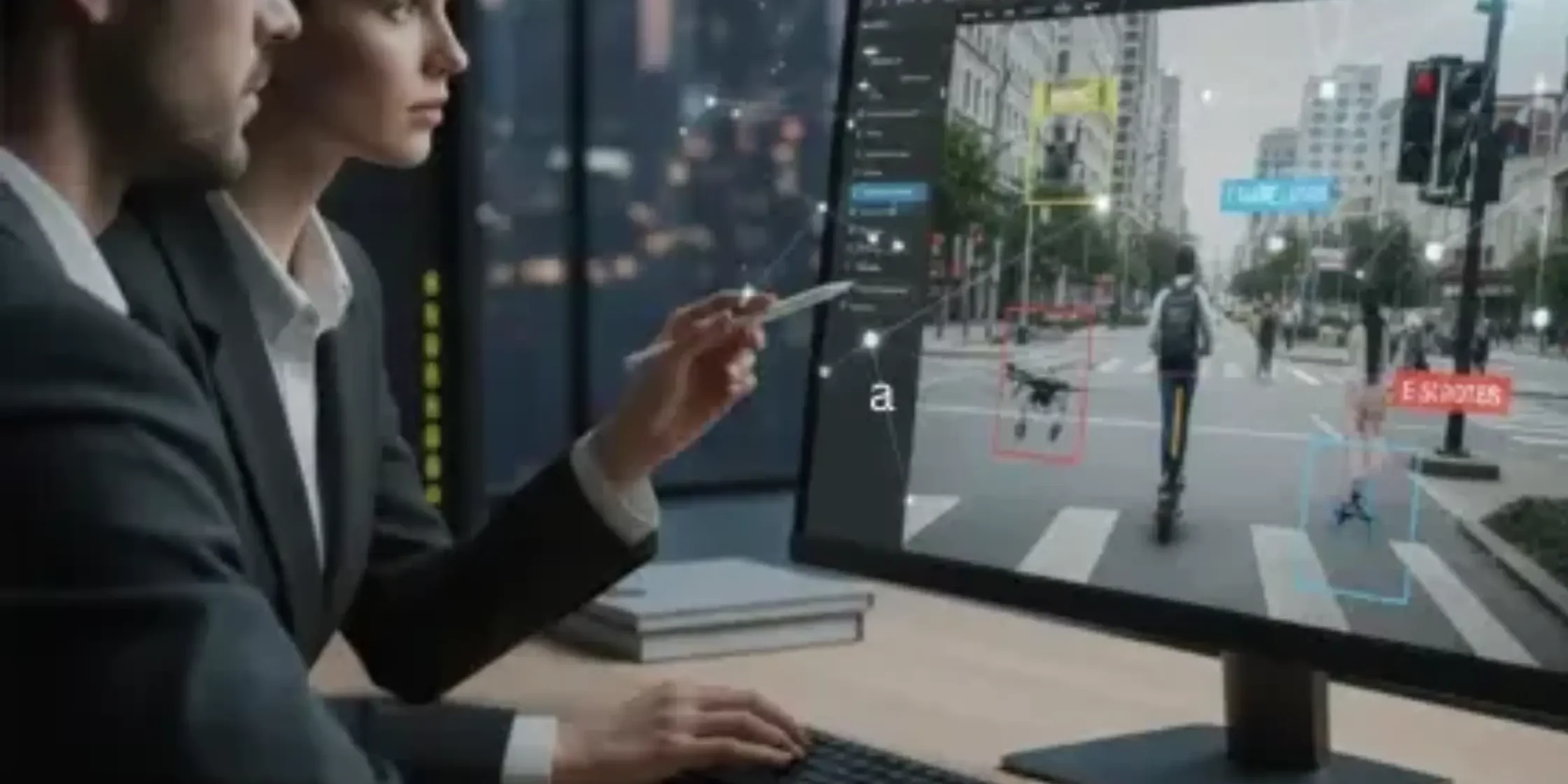

Data annotation is the process of labeling, tagging, and categorizing raw data to make it understandable and usable for machine learning algorithms. Think of it as teaching a child to recognize objects: you point to a dog and say “dog,” to a cat and say “cat.” Similarly, data annotation involves identifying and labeling elements within datasets so AI models can learn to recognize patterns, make predictions, and deliver reliable results.

Without annotation, AI models would struggle to make sense of unstructured data, unable to distinguish between a pedestrian and a lamppost, or identify a tumor in a medical scan. The process transforms meaningless pixels, text, or audio waves into structured, high-quality training datasets that algorithms can interpret and learn from.

Types of Data Annotation

The annotation landscape encompasses various data modalities, each requiring specialized techniques:

Image Annotation: Drawing bounding boxes around objects, creating polygon segmentations for precise boundaries, labeling semantic segments pixel by pixel, and identifying keypoints for facial recognition or pose estimation.

Text Annotation: Identifying named entities like people, organizations, and locations; analyzing sentiment in customer feedback; recognizing intent in chatbot conversations; and establishing relationships between different text elements.

Video Annotation: Tracking objects across frames, annotating actions and events, segmenting scenes, and labeling temporal sequences for activities.

Audio Annotation: Transcribing speech to text, identifying speakers, recognizing emotions in voice, and classifying sounds for various applications.

3D Annotation: Creating 3D cuboids for spatial understanding, annotating LiDAR point clouds for autonomous vehicles, and labeling sensor fusion data combining multiple data sources.
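To make the bounding-box idea from the image annotation item concrete, here is a minimal Python sketch. The `BoundingBox` schema and its field names are assumptions made for illustration, not the format of any particular annotation tool. The `iou` function computes intersection over union, a common way to compare two boxes drawn around the same object.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BoundingBox:
    """Axis-aligned box in pixel coordinates (hypothetical schema)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # Degenerate boxes (max <= min) count as zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: BoundingBox, b: BoundingBox) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    inter_h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if inter_w <= 0 or inter_h <= 0:
        return 0.0
    intersection = inter_w * inter_h
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


# Two annotators box the same dog slightly differently.
first = BoundingBox("dog", 10, 10, 110, 110)
second = BoundingBox("dog", 60, 10, 160, 110)
overlap = iou(first, second)
print(f"IoU between the two boxes: {overlap:.3f}")
```

A score near 1.0 means the boxes almost coincide, while a low score suggests the two annotations disagree about where the object is.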

The Explosive Growth of the Data Annotation Market

The data annotation industry is experiencing unprecedented growth, reflecting AI’s expanding role across sectors. By one estimate, the market reached approximately USD 836 million in 2024 and is projected to grow at a 27% CAGR between 2025 and 2034, underscoring the critical importance of quality training data.

A separate forecast, starting from a larger baseline, expects the market to expand from USD 2.32 billion in 2025 to USD 9.78 billion by 2030, powered by surging enterprise demand for high-quality training data across generative AI, autonomous systems, and multimodal foundation models. This trajectory positions annotation platforms as strategic infrastructure rather than tactical vendors.
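For readers who want to check the arithmetic behind the growth figures quoted above, the compound annual growth rate (CAGR) formula can be sketched in a few lines of Python. The function names are illustrative. The 10 compounding periods for the first estimate (2024 base to 2034) are an assumption, since the source does not state the exact base year of the projection.

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `cagr` (e.g. 0.27 for 27%) for `years` periods."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Constant annual growth rate that takes start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# First estimate: USD 836 million in 2024, 27% CAGR, assumed 10 periods to 2034.
projected_2034 = project_value(836.0, 0.27, 10)

# Second estimate: USD 2.32 billion (2025) to USD 9.78 billion (2030), 5 periods.
forecast_cagr = implied_cagr(2.32, 9.78, 5)

print(f"Projected 2034 market size: USD {projected_2034:,.0f} million")
print(f"Implied CAGR for 2025 to 2030: {forecast_cagr:.1%}")
```

Under these assumptions, the first estimate compounds to roughly USD 9.1 billion by 2034, and the second forecast implies growth of about 33% per year.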

Key Market Drivers

Several factors are propelling this explosive growth:

Generative AI Boom: The rise of Large Language Models (LLMs) like ChatGPT and advanced multimodal AI systems requires massive volumes of precisely annotated data. Deloitte predicts that 25% of enterprises using GenAI will deploy AI agents in 2025, significantly boosting demand for annotated training data.

Autonomous Vehicle Revolution: Tesla’s Buffalo, Palo Alto, and Draper centers process thousands of clips daily to train Full Self-Driving software, illustrating the scale and sensitivity of annotation work in autonomous driving.

Healthcare AI Transformation: The healthcare and medical data annotation tools market is set to rise to $1.1 billion by 2032, with an annual growth rate of 23.85%, driven by AI applications in diagnostics, telemedicine, and personalized medicine.

IoT and Big Data Expansion: IoT devices are predicted to number around 25 billion by 2030, all generating data that requires annotation for meaningful AI applications.

Why Data Annotation Matters: The Quality Imperative

The adage “garbage in, garbage out” perfectly captures annotation’s critical role. MIT estimates that up to 80% of data scientists’ time is spent on data preparation and labeling rather than actual modeling, highlighting annotation’s central position in the AI development lifecycle.

Impact on Model Performance

High-quality annotated data directly influences:

Accuracy and Reliability: Well-annotated datasets enable AI models to learn correct patterns and make accurate predictions. In healthcare, this could mean the difference between detecting a tumor early or missing it entirely.

Bias Mitigation: Diverse, properly annotated datasets help minimize algorithmic bias, ensuring AI systems make fair decisions across different demographics and scenarios.

Generalization Capability: Quality annotations help models perform well on new, unseen data rather than just memorizing training examples.

Safety and Compliance: In critical applications like autonomous vehicles and medical diagnostics, annotation quality directly impacts human safety and regulatory approval.

Real-World Examples: Data Annotation in Action

Healthcare AI: Saving Lives Through Precision

Healthcare organizations are investing heavily in annotation infrastructure to unlock AI’s potential in diagnostics and treatment planning.

Radiology and Medical Imaging: The FDA has approved over 300 AI algorithms in recent years, with the vast majority relating to medical imaging, underscoring the field’s rapid advancement.

Medical annotation requires specialized expertise and tools:

- Tumor Detection: Annotating CT scans, MRIs, and X-rays to identify cancerous lesions

- Organ Segmentation: Precisely outlining anatomical structures for surgical planning

- Pathology Analysis: Labeling cellular structures in microscopy images

- Anomaly Identification: Marking abnormalities for diagnostic AI systems

RapidAI reduced MRI and CT annotation time by 70% using AI-assisted labeling, while Stanford Medicine cut experiment duration from 21 to 4 days, demonstrating how advanced annotation tools accelerate medical AI development.

The complexity of medical annotation stems from several factors: strict HIPAA compliance requirements, specialized medical knowledge needed by annotators, complex DICOM and NIfTI file formats, and the high stakes of diagnostic accuracy.

Natural Language Processing: Understanding Human Communication

Text annotation powers the conversational AI revolution. Large Language Models like GPT-4 and Claude require extensive text annotation for training and fine-tuning.

Key NLP Annotation Tasks:

- Named Entity Recognition: Identifying people, organizations, locations, and other entities in text

- Sentiment Analysis: Determining emotional tone in customer feedback and social media

- Intent Classification: Understanding user goals in chatbot conversations

- Relationship Extraction: Mapping connections between entities for knowledge graphs
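As an illustration of what a named entity annotation can look like in practice, the sketch below stores each entity as a character span over the text. The `EntitySpan` schema, the label names, and the example sentence are all made up for this example. Real annotation tools use their own export formats.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EntitySpan:
    start: int  # inclusive character offset
    end: int    # exclusive character offset
    label: str


def validate_spans(text: str, spans: list[EntitySpan], allowed: set[str]) -> list[str]:
    """Return a list of problems found; an empty list means the spans look sane."""
    problems = []
    ordered = sorted(spans, key=lambda s: (s.start, s.end))
    for span in ordered:
        if not (0 <= span.start < span.end <= len(text)):
            problems.append(f"out of range: {span}")
        if span.label not in allowed:
            problems.append(f"unknown label: {span.label}")
    # Flat NER schemes usually forbid overlapping entities.
    for prev, cur in zip(ordered, ordered[1:]):
        if cur.start < prev.end:
            problems.append(f"overlap: {prev} and {cur}")
    return problems


ALLOWED_LABELS = {"PERSON", "ORGANIZATION", "LOCATION"}
text = "Maria Silva joined Acme Corp in Lisbon."
spans = [
    EntitySpan(0, 11, "PERSON"),
    EntitySpan(19, 28, "ORGANIZATION"),
    EntitySpan(32, 38, "LOCATION"),
]

entities = [(text[s.start:s.end], s.label) for s in spans]
problems = validate_spans(text, spans, ALLOWED_LABELS)
print(entities)
print(problems)
```

Storing offsets rather than copied strings keeps the annotation tied to an exact position in the text, which matters when the same name appears more than once.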

Text annotation is estimated to secure 70% market share by 2037, driven by increasing use of text-based annotations in chatbots and NLP tools. The explosion of text data from social media, customer service transcripts, and legal documents continues driving demand for accurate text labeling.

Computer Vision Applications

Beyond autonomous vehicles and healthcare, computer vision applications across industries rely on annotated visual data:

Retail and E-commerce: Target reached 96% automation with 99% accuracy in product-content auditing, an example of labeling precision translating into higher conversion and direct revenue impact.

Agriculture: AI-powered systems use annotated drone imagery to detect crop diseases, estimate yields, and optimize irrigation.

Manufacturing: Quality control systems trained on annotated defect images identify product flaws faster than human inspectors.

Security and Surveillance: Facial recognition and behavioral analysis systems depend on annotated video datasets.

The Annotation Process: From Raw Data to Training-Ready Datasets

Creating high-quality annotated datasets involves several critical steps:

- Data Collection and Preparation

Organizations gather raw data from various sources, such as sensors, cameras, user interactions, or existing databases. This data must be cleaned, organized, and prepared for annotation.

- Annotation Guidelines Development

Clear, detailed guidelines ensure consistency across annotators. These documents define labeling standards, edge case handling, and quality criteria.

- Tool Selection

Organizations choose between commercial platforms like Scale AI, Labelbox, and SuperAnnotate, or open-source solutions like Label Studio and CVAT, based on their specific needs.

- Annotation Execution

This phase involves the actual labeling work, which can be:

- Manual Annotation: Human annotators carefully label each data point

- Semi-Automated Annotation: AI-assisted tools pre-label data, with humans reviewing and correcting

- Active Learning: Models identify uncertain cases for human annotation, optimizing efficiency
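The active learning idea above can be sketched with uncertainty sampling, one common strategy among several. The example assumes a model has already produced class probabilities for each unlabeled item. The item IDs and probabilities are invented for illustration.

```python
import math


def entropy(probabilities: list[float]) -> float:
    """Shannon entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


def select_for_annotation(predictions: dict[str, list[float]], budget: int) -> list[str]:
    """Pick the `budget` items whose predicted distributions are most uncertain."""
    ranked = sorted(
        predictions,
        key=lambda item_id: entropy(predictions[item_id]),
        reverse=True,
    )
    return ranked[:budget]


# Hypothetical model outputs: item ID -> probability per class.
predictions = {
    "img_001": [0.98, 0.01, 0.01],
    "img_002": [0.40, 0.35, 0.25],
    "img_003": [0.70, 0.20, 0.10],
    "img_004": [0.34, 0.33, 0.33],
}

queue = select_for_annotation(predictions, budget=2)
print(queue)
```

Here the two items with nearly flat probability distributions are sent to human annotators first, while the confidently classified image waits.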

- Quality Assurance

Multiple layers of quality control ensure annotation accuracy:

- Consensus Annotation: Multiple annotators label the same data, with disagreements resolved

- Expert Review: Domain specialists validate complex annotations

- Automated Checks: Software identifies inconsistencies and potential errors

- Inter-Annotator Agreement: Statistical measures assess consistency across annotators
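Two of the checks above lend themselves to a short Python sketch: resolving consensus by majority vote, and measuring inter-annotator agreement with Cohen's kappa, which corrects raw agreement for agreement expected by chance. The tie-handling rule (return `None` so the item can be escalated) is an assumption made for this example, and the labels are invented.

```python
from collections import Counter
from typing import Optional


def majority_label(votes: list[str]) -> Optional[str]:
    """Most common label, or None on a tie or empty input so the item can be escalated."""
    if not votes:
        return None
    counts = Counter(votes).most_common()
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement: probability both pick the same label independently.
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


annotator_a = ["cat", "cat", "dog", "dog", "cat", "dog", "cat", "dog"]
annotator_b = ["cat", "cat", "dog", "cat", "cat", "dog", "dog", "dog"]

kappa = cohens_kappa(annotator_a, annotator_b)
print(f"Cohen's kappa: {kappa:.2f}")
print(majority_label(["cat", "cat", "dog"]))
```

In this example the annotators agree on 6 of 8 items, but half of that agreement would be expected by chance, so kappa comes out at 0.5 rather than 0.75.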

- Dataset Management

Properly versioned, documented, and organized datasets enable reproducible AI development and model iteration.
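One lightweight way to support versioning is to fingerprint each annotation export, so any change to the labels produces a new identifier. The sketch below is an assumption about how this could be done with Python's standard library, not a description of any specific platform. The record fields are hypothetical.

```python
import hashlib
import json


def dataset_fingerprint(records: list[dict]) -> str:
    """SHA-256 over a canonical JSON form, independent of record and key order."""
    canonical = sorted(
        json.dumps(record, sort_keys=True, separators=(",", ":"))
        for record in records
    )
    digest = hashlib.sha256()
    for line in canonical:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


version_1 = [
    {"item_id": "img_001", "label": "dog"},
    {"item_id": "img_002", "label": "cat"},
]
# Same content, different record and key order.
reordered = [
    {"label": "cat", "item_id": "img_002"},
    {"label": "dog", "item_id": "img_001"},
]
# One label corrected during review.
version_2 = [
    {"item_id": "img_001", "label": "dog"},
    {"item_id": "img_002", "label": "lynx"},
]

print(dataset_fingerprint(version_1)[:12])
print(dataset_fingerprint(version_2)[:12])
```

Recording the fingerprint alongside each trained model makes it possible to tell later exactly which version of the labels that model saw.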

Emerging Trends in Data Annotation for 2025

The annotation landscape continues to evolve rapidly. Organizations must now manage and annotate vast amounts of unstructured data, including text, video, and sensor data from expanding digital platforms.

AI-Assisted Annotation

AI-powered annotation tools are expected to reduce manual annotation time by up to 50% while improving accuracy. Machine learning algorithms learn from past annotations, continually improving quality and speed.

Generative AI is transforming the annotation workflow itself. Generative models are increasingly being used to pre-label data, which human annotators then refine, significantly reducing time and effort required for large-scale projects.
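A pre-label and refine workflow might route model suggestions as in the sketch below. The `PreLabel` structure, the 0.9 threshold, and the two-queue split are assumptions chosen for illustration. In practice, teams tune these to their own quality requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreLabel:
    item_id: str
    label: str
    confidence: float  # model's self-reported score in [0, 1]


def route_pre_labels(pre_labels, spot_check_threshold=0.9):
    """Split pre-labels into a full-review queue and a lighter spot-check queue."""
    full_review, spot_check = [], []
    for pre_label in pre_labels:
        if pre_label.confidence >= spot_check_threshold:
            spot_check.append(pre_label)
        else:
            full_review.append(pre_label)
    # Least confident items first, so reviewers see the riskiest pre-labels early.
    full_review.sort(key=lambda p: p.confidence)
    return full_review, spot_check


batch = [
    PreLabel("doc_1", "invoice", 0.97),
    PreLabel("doc_2", "receipt", 0.55),
    PreLabel("doc_3", "invoice", 0.81),
]

full_review, spot_check = route_pre_labels(batch)
print([p.item_id for p in full_review])
print([p.item_id for p in spot_check])
```

Model confidence is not the same as correctness, so the spot-check queue still needs sampling by human reviewers rather than automatic acceptance.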

Multimodal Data Labeling

Modern AI systems process multiple data types simultaneously. Generative AI adoption is shifting requirements from single-modality images to complex combinations of text, video, and 3D point clouds, requiring annotation platforms that handle diverse formats seamlessly.

Privacy-Preserving Annotation

With growing data privacy concerns, new techniques enable annotation while protecting sensitive information:

- Federated Learning: Models train on distributed data without centralizing it

- Differential Privacy: Mathematical guarantees prevent identifying individuals from annotated datasets

- Synthetic Data Generation: Creating artificial datasets that maintain statistical properties without exposing real data
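To give a flavor of the differential privacy item above, here is a minimal sketch of the Laplace mechanism applied to label counts. It assumes each individual contributes exactly one record to exactly one count, and that neighboring datasets differ by adding or removing one record, so the sensitivity is 1. The counts and the epsilon value are invented, and a production system would rely on a vetted privacy library rather than this toy version.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_label_counts(counts: dict[str, int], epsilon: float, rng: random.Random) -> dict[str, float]:
    """Add Laplace noise with scale 1/epsilon to each count (assumes sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    return {label: count + laplace_noise(scale, rng) for label, count in counts.items()}


# Hypothetical counts of diagnosis labels in an annotated dataset.
true_counts = {"benign": 412, "malignant": 57, "inconclusive": 9}

rng = random.Random(0)  # seeded only to make the example repeatable
noisy_counts = private_label_counts(true_counts, epsilon=0.5, rng=rng)
for label, value in noisy_counts.items():
    print(f"{label}: {value:.1f}")
```

A smaller epsilon adds more noise and gives stronger privacy. A larger epsilon keeps the published counts closer to the true values.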

Real-Time and Edge Annotation

As AI moves to edge devices, demand grows for annotation tools supporting real-time data processing and on-device learning.

Ethical AI and Bias Reduction

By 2025, companies must adopt fair data sourcing and bias-reduction practices to ensure diverse, accurate, and compliant datasets. Organizations increasingly focus on representative datasets that work equitably across demographics.

Challenges in Data Annotation

Despite technological advances, several challenges persist:

Scalability and Cost

Annotation remains labor-intensive and expensive. Organizations must balance quality requirements against budget constraints and project timelines.

Quality Consistency

Maintaining annotation quality across large teams and extended projects requires robust training, clear guidelines, and continuous quality monitoring.

Domain Expertise

Complex applications like medical imaging or legal document analysis require annotators with specialized knowledge, limiting the talent pool and increasing costs.

Data Privacy and Security

Data privacy regulations like GDPR impose strict rules on how data is handled, stored, and annotated. Companies must ensure annotation practices comply with regulations to avoid penalties.

Annotator Bias

Human annotators bring inherent biases that can propagate into AI models. Diverse annotation teams and bias-aware guidelines help mitigate this risk.

Best Practices for Successful Data Annotation

Organizations achieving annotation excellence follow several key principles:

Invest in Clear Guidelines

Comprehensive annotation guidelines with visual examples reduce ambiguity and improve consistency.

Implement Rigorous Quality Control

Multi-stage review processes, consensus mechanisms, and expert validation ensure high-quality outputs.

Choose the Right Annotation Partner

Whether building in-house teams or outsourcing, partner selection significantly impacts project success. Consider expertise, scalability, security practices, and domain knowledge.

Leverage AI Assistance Strategically

Use automation for repetitive tasks while reserving human judgment for complex cases and quality assurance.

Prioritize Annotator Training

Well-trained annotators produce better results. Invest in comprehensive onboarding and ongoing skill development.

Maintain Dataset Documentation

Detailed documentation of annotation processes, guidelines, and decisions ensures reproducibility and facilitates model debugging.

The Future of Data Annotation

As AI capabilities expand, annotation’s role will evolve but remain central. Annotation quality is now tied directly to revenue, and platforms are positioned as strategic infrastructure rather than support services.

The industry is moving toward:

Greater Automation: AI will handle more routine annotation tasks, with humans focusing on complex cases and quality oversight.

Specialized Expertise: Growing demand for domain experts who combine technical annotation skills with specialized knowledge.

Continuous Annotation: Rather than one-time dataset creation, ongoing annotation loops that continuously improve deployed models.

Standardization: Industry-wide standards for annotation quality, format compatibility, and evaluation metrics.

Conclusion: Data Annotation as the Backbone of Scalable AI

Data annotation has evolved from a support function into a strategic pillar of successful AI systems. From autonomous vehicles and medical diagnostics to conversational AI and computer vision, high-quality annotated data turns raw information into accurate, trustworthy intelligence.

As AI adoption accelerates, organizations that invest in scalable, ethical, and well-governed annotation frameworks gain a clear competitive edge. The future of AI depends not just on speed, but on balancing quality, security, domain expertise, and compliance at scale.

At Viaante, we enable reliable AI development through high-quality data annotation across image, text, audio, and 3D data, helping enterprises build production-ready, compliant, and trustworthy AI solutions.